Pipeline schema

Pipeline schema files describe the structure and validation constraints of your workflow parameters. They are used to validate parameters before launch to prevent software or pipelines from failing in unexpected ways at runtime.
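To illustrate what validating parameters before launch involves, here is a minimal Python sketch. It is not the validator that Seqera Platform or Nextflow uses. The parameter names (`input`, `max_cpus`, `skip_qc`) and the `check_params` helper are hypothetical, and only the `required` and `type` keywords are checked.

```python
# Hypothetical schema fragment: parameter names are illustrative only.
schema = {
    "type": "object",
    "required": ["input"],
    "properties": {
        "input": {"type": "string"},
        "max_cpus": {"type": "integer"},
        "skip_qc": {"type": "boolean"},
    },
}

# Map JSON Schema type names to Python types.
JSON_TYPES = {"string": str, "integer": int, "number": (int, float), "boolean": bool}


def check_params(params, schema):
    """Return a list of human-readable problems; an empty list means the params pass."""
    errors = []
    for name in schema.get("required", []):
        if name not in params:
            errors.append(f"missing required parameter: {name}")
    properties = schema.get("properties", {})
    for name, value in params.items():
        spec = properties.get(name)
        if spec is None:
            errors.append(f"unexpected parameter: {name}")
            continue
        declared = spec.get("type")
        expected = JSON_TYPES.get(declared)
        if expected is None:
            continue  # type not declared, or not handled by this sketch
        # bool is a subclass of int in Python, so reject it for non-boolean types.
        is_bool_mismatch = isinstance(value, bool) and declared != "boolean"
        if is_bool_mismatch or not isinstance(value, expected):
            errors.append(f"{name}: expected {declared}, got {type(value).__name__}")
    return errors


good = {"input": "samplesheet.csv", "max_cpus": 4}
bad = {"max_cpus": "four", "threads": 2}

print(check_params(good, schema))
for problem in check_params(bad, schema):
    print(problem)
```

Catching a missing or mistyped parameter at this stage is cheaper than discovering it partway through a run, which is the motivation for validating against the schema before launch.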

You can populate pipeline parameters by uploading a YAML or JSON file, or by entering them in the Seqera Platform interface. The platform uses your pipeline schema to build a custom parameters form on the Launchpad.

See nf-core/rnaseq for an example of the pipeline parameters that a JSON schema file can represent.

Building pipeline schema files

The pipeline schema is based on json-schema.org syntax, with some additional conventions. While you can create your pipeline schema manually, we highly recommend using nf-core tools, a toolset for developing Nextflow pipelines built by the nf-core community.
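As a rough picture of what such a file can look like, the Python sketch below builds a small schema and prints it as JSON. It assumes the draft-07 style layout that nf-core schemas have commonly used, where parameters are grouped under `definitions` and pulled in with `allOf`; your schema may use a different layout. The group name, parameter names, and defaults are hypothetical.

```python
import json

# Hypothetical pipeline schema: names and defaults are illustrative only.
schema = {
    "$schema": "http://json-schema.org/draft-07/schema",
    "title": "example pipeline parameters",
    "description": "Parameters for a hypothetical example pipeline",
    "type": "object",
    "definitions": {
        # Each group becomes a section of related parameters.
        "input_output_options": {
            "title": "Input/output options",
            "type": "object",
            "required": ["input"],
            "properties": {
                "input": {
                    "type": "string",
                    "description": "Path to the samplesheet.",
                },
                "outdir": {
                    "type": "string",
                    "default": "./results",
                    "description": "Directory where results are written.",
                },
            },
        }
    },
    # Pull every parameter group into the top-level schema.
    "allOf": [{"$ref": "#/definitions/input_output_options"}],
}

rendered = json.dumps(schema, indent=2)
print(rendered)
```

Standard JSON Schema keywords such as `type`, `required`, `default`, and `description` carry the validation rules and help text; grouping parameters is one of the additional conventions layered on top.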

When you run the nf-core schema build command in your pipeline root directory, the tool collects your pipeline parameters and gives you interactive prompts about missing or unexpected parameters. If no existing schema file is found, the tool creates one for you. The schema build command includes the option to validate and lint your schema file according to best practice guidelines from the nf-core community.

The schema builder is created by the nf-core community, but it can be used with any Nextflow pipeline.

Customize pipeline schema

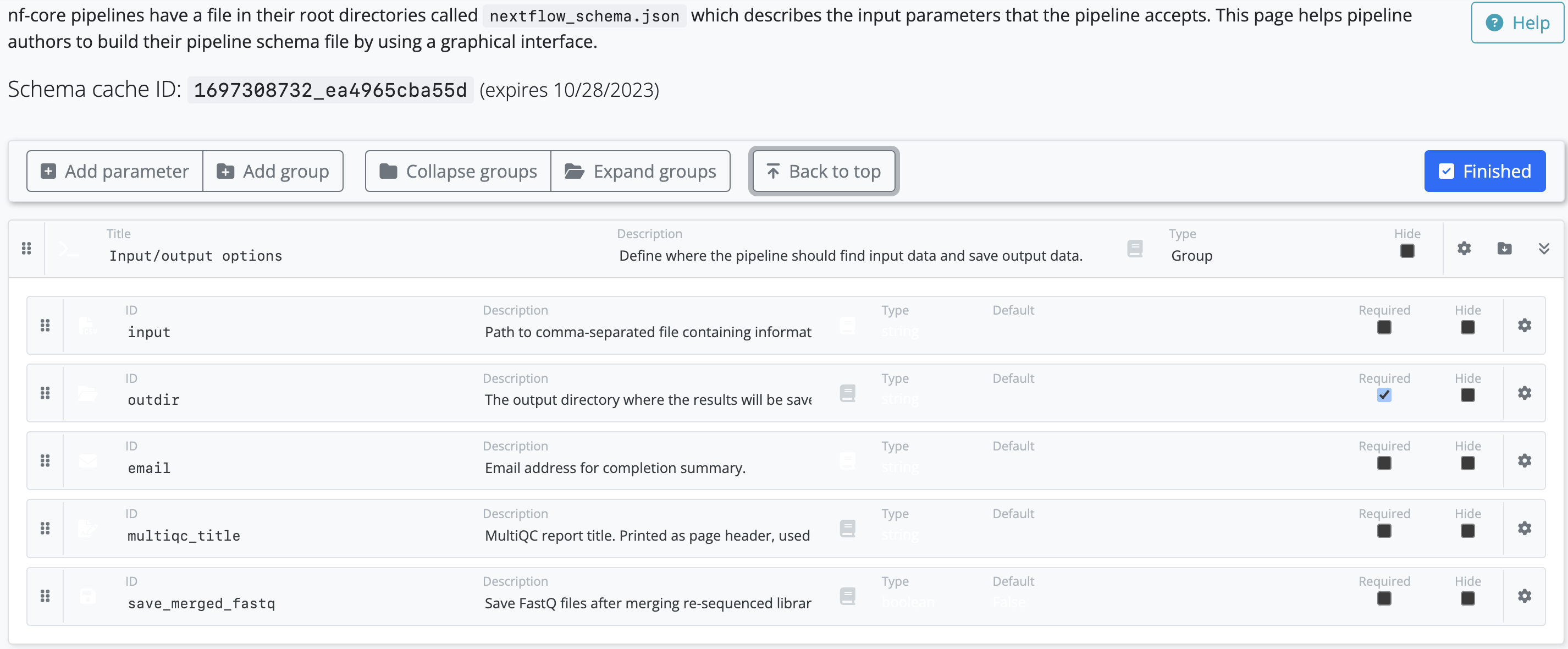

After nf-core schema build has created the skeleton pipeline schema file, the command-line tool prompts you to open a graphical schema editor on the nf-core website.

Leave the command line tool running in the background as it checks the status of your schema on the website. When you select Finished on the schema editor page, your changes are saved to the schema file locally.